Abstract

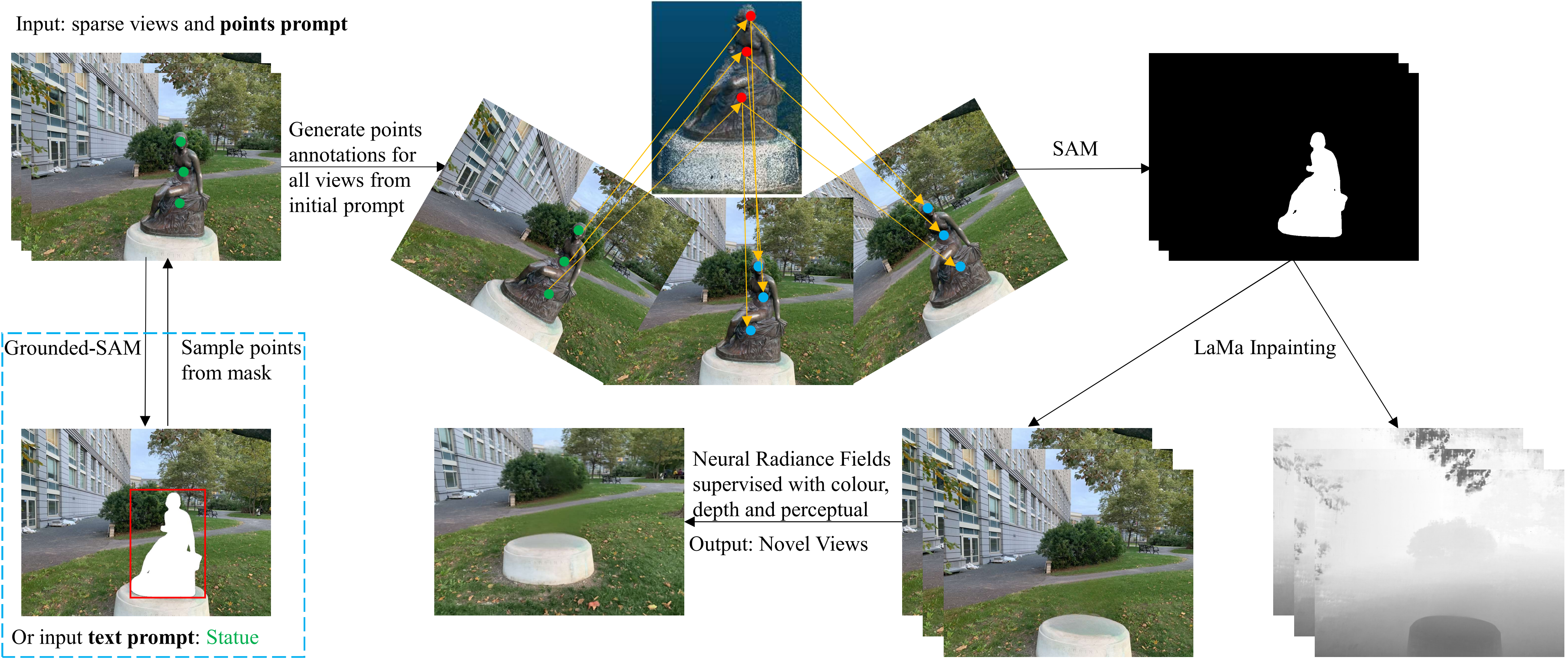

The emergence of Neural Radiance Fields (NeRF) for novel view synthesis has increased interest in 3D scene editing. An important editing task is removing objects from a scene while keeping the result visually plausible and consistent across views. However, current methods face challenges such as time-consuming object labelling, limited ability to remove specific targets, and degraded rendering quality after removal. This paper proposes a novel object-removing pipeline, named OR-NeRF, that can remove objects from 3D scenes with either point or text prompts on a single view, achieving better performance in less time than previous works. Our method uses a point projection strategy to rapidly spread user annotations to all views, significantly reducing the processing burden. This algorithm allows us to leverage the recent 2D segmentation model Segment-Anything (SAM) to predict masks with improved precision and efficiency. Additionally, we obtain colour and depth priors through 2D inpainting methods. Finally, our algorithm employs depth supervision and perceptual loss for scene reconstruction to maintain consistency in geometry and appearance after object removal. Experimental results demonstrate that our method achieves better editing quality in less time than previous works, both qualitatively and quantitatively.
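
To make the idea of spreading single-view annotations to all views concrete, the following is a minimal sketch of pinhole projection, not the pipeline's actual implementation. It assumes the annotated pixels have already been lifted to 3D world points by some means, that each view has a known world-to-camera rotation and translation, and that cameras look down the positive z-axis; the names `project_point` and `spread_prompts` and the view dictionary keys are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Pixel = Tuple[float, float]


def project_point(
    point_world: Sequence[float],
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
    focal: float,
    principal: Sequence[float],
) -> Optional[Pixel]:
    """Project one 3D world point into a view's pixel coordinates.

    Assumes a world-to-camera transform (x_cam = R @ x_world + t) and a
    camera looking down +z. Returns None for points behind the camera.
    """
    # Transform the point from world coordinates to camera coordinates.
    x, y, z = (
        sum(rotation[r][c] * point_world[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    if z <= 0:
        return None
    # Perspective divide, then apply the intrinsics.
    return (focal * x / z + principal[0], focal * y / z + principal[1])


def spread_prompts(
    points_world: Sequence[Sequence[float]],
    views: Sequence[Dict],
) -> List[List[Pixel]]:
    """Return, for every view, the pixel prompts visible in that view."""
    prompts_per_view = []
    for view in views:
        pixels = []
        for point in points_world:
            pixel = project_point(
                point,
                view["rotation"],
                view["translation"],
                view["focal"],
                view["principal"],
            )
            # Keep only projections that land inside the image bounds.
            if pixel is not None and (
                0 <= pixel[0] < view["width"] and 0 <= pixel[1] < view["height"]
            ):
                pixels.append(pixel)
        prompts_per_view.append(pixels)
    return prompts_per_view
```

Under these assumptions, the per-view pixel lists could then serve as point prompts for a 2D segmentation model such as SAM, so that the user annotates only one view.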